- Home

- non compression

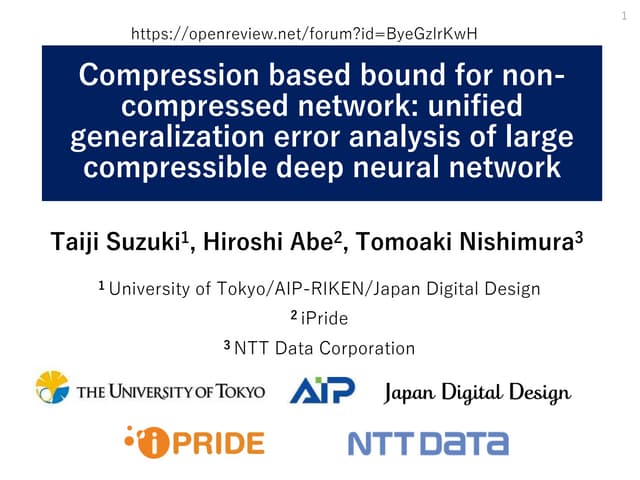

- Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

ICLR 2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network



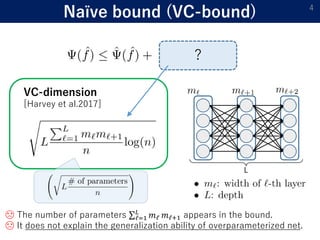

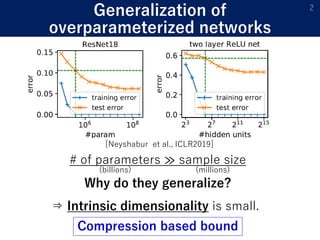

1) The paper presents a new compression-based bound on the generalization error of large deep neural networks that applies even when the network is not explicitly compressed.

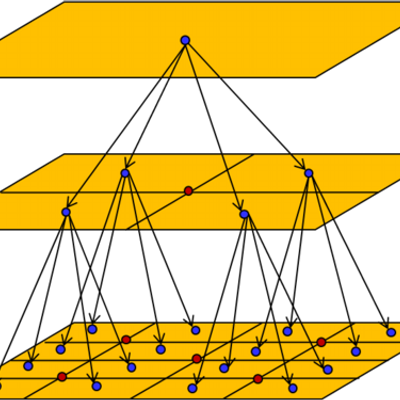

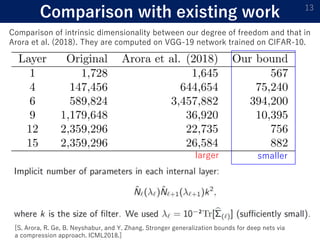

2) It shows that if a trained network's weight matrices and covariance matrices are close to low-rank, then the network has a small intrinsic dimensionality and can be efficiently compressed.

3) This allows deriving a tighter generalization bound than existing approaches, providing insight into why overparameterized networks generalize well despite having more parameters than training examples.
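The link between a near low-rank weight matrix and compressibility in point 2 can be illustrated with a small NumPy sketch. This is not the paper's bound or its definition of intrinsic dimensionality: the 99% spectral-energy threshold, the synthetic matrix, and the helper names `effective_rank` and `truncate` are all assumptions chosen for illustration.

```python
import numpy as np


def effective_rank(W, energy=0.99):
    """Smallest k whose top-k singular values hold `energy` of the squared spectrum.

    Illustrative stand-in only; the energy threshold is an assumption,
    not the quantity used in the paper.
    """
    s = np.linalg.svd(W, compute_uv=False)
    cum = np.cumsum(s ** 2) / np.sum(s ** 2)
    k = int(np.searchsorted(cum, energy)) + 1
    return min(k, len(s))


def truncate(W, k):
    """Best rank-k approximation of W in Frobenius norm (truncated SVD)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    return (U[:, :k] * s[:k]) @ Vt[:k, :]


# Synthetic "trained" weight matrix: rank-r signal plus small noise (hypothetical data).
rng = np.random.default_rng(0)
m, n, r = 256, 256, 8
W = rng.standard_normal((m, r)) @ rng.standard_normal((r, n))
W += 1e-3 * rng.standard_normal((m, n))

k = effective_rank(W)
W_k = truncate(W, k)

# Relative Frobenius error of the compressed matrix.
rel_err = np.linalg.norm(W - W_k) / np.linalg.norm(W)

# Parameter counts: dense matrix versus two rank-k factors.
params_full = m * n
params_low = k * (m + n)

print(f"effective rank: {k}")
print(f"relative error: {rel_err:.2e}")
print(f"parameters: {params_full} dense vs {params_low} factored")
```

Although the matrix is stored with `m * n` entries, almost all of its spectral energy sits in a handful of directions, so two thin factors reproduce it closely. That gap between nominal size and effective size is the intuition behind measuring a network by its compressed size rather than its raw parameter count.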

ICLR 2020


Taiji Suzuki on X: @andrewgwils @g_benton_ This is very relevant to our ICLR 2020 paper. It gives a new compression based bound for non-compressed network. The intrinsic dimensionality can be given by

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

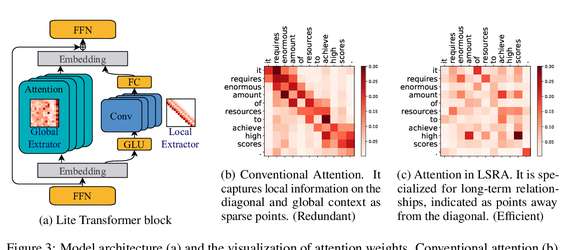

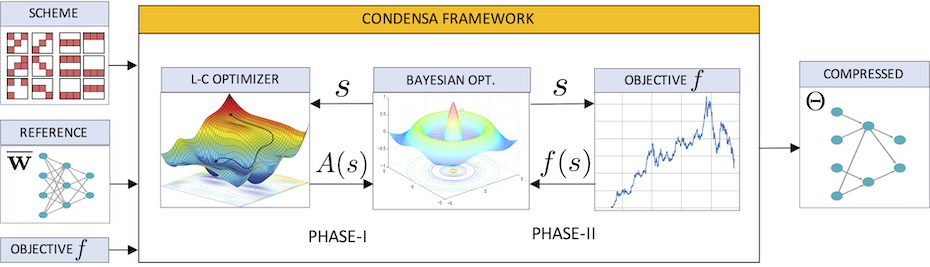

A Programmable Approach to Neural Network Compression

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

YK (@yhkwkm) / X

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

RuntianZ.github.io/iclr2020.html at master · RuntianZ/RuntianZ.github.io · GitHub

ICLR 2020

Iclr2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

REPO]@Telematika shaohua0116/ICLR2020-OpenReviewData

Taiji Suzuki, Associate Professor at the University of Tokyo
